3D Parkour Game Dev Log #01 :: Starting the AI System

Series Introduction

Hey! This is the beginning of a dev log series for my latest game project, which I’ve been working on for around 2 months. I’ve decided that it’s time to stop making (almost) everything behind closed doors (not only with this project!) and instead try to document my progress, the challenges I run into, and potential solutions to them.

Edit: I've sadly put this project "on-hold" as I've decided to focus on creating and fully realizing a smaller scale project before spending years on a larger scale project such as this. I still want to revisit this one as I have a lot of ideas for it, but it'll have to wait for now... :(

Project Introduction

Alright, so before talking about the AI stuff I’ve been working on for the past month, I should probably introduce my current idea for this game. Well, my idea is still free form, but for the time being, I’m trying to head towards an

Showcase of the player's current movement capabilities

Showcase of the AI agents' current behavior

It's inspired by various rogue-lites such as Hades, Risk of Rain 2 and 20 Minutes Till Dawn (so indirectly Vampire Survivors too) thanks to their rich progression systems, but also by Mirror's Edge (and its sequel, Catalyst) for its amazing parkour movement, which I still absolutely adore to this day.

Old prototype (ported from top-down 2D to 3D) of a perk system, basic enemy knockback and hordes

Anyway, as you can see, it’s still _VERY_ early days for the project, as is apparent from the complete lack of animations or visual polish. That’s because I’m making sure the core gameplay is in place first, before committing time to the presentation (visuals + audio). That includes even temp art in my case, as it takes more time than I’d like to admit...

Focus on AI System

Current State of AI

So the reason I’ve been focusing on the AI system for the past month is because I believe it is currently the most lacking aspect of my gameplay. In fact, there practically isn’t any AI behavior, other than solely chasing the player via a navmesh and dealing damage when within a certain distance of the player. And even with that, there are many flaws:

Player moving in a simple line, with enemies spawning and forming an ever-narrow line towards the player

- The follow behavior is far too predictable, which makes it easy to manipulate the agents’ positioning. They always take the shortest path, so if you outrun them for a bit, they’ll form a mostly single-file line, making it super low-effort to eliminate all the closest enemies.

Player being chased by enemies can simply climb up a wall and become unreachable

- The agents can’t do parkour like the player. This makes it trivially easy to avoid being attacked by reaching a position the AI can’t reach. How severe this is depends on the level’s layout.

Player moving around some obstacles to become out-of-sight, yet enemies still perfectly target the player

- Even if line-of-sight is broken, the agents always target the player. This isn't wrong, and it's often desired, especially for the rogue-lite genre, that the agents always chase the player. Sadly, this restricts play-styles such as stealth from being a viable option, which I would like to allow.

This led me to decide that before working on other aspects of the game loop, such as the perk system, I should first get an AI system in place, which will ideally inform the rest of the game mechanics.

Research and Prototypes

With that settled, I began by researching various AI techniques and topics before starting any prototypes: behavior trees, utility AI, navigation systems, line-of-sight detection, etc. Whilst looking into ways to make AI movement look more natural in larger groups, I found various resources specifically for the RTS genre, where large groups of player-guided units move across the map in unison in a pretty natural way. After looking into it some more, I noticed a few of them used boids to drive the group’s local avoidance, and I wondered if boids could also drive the aimless movement when no target is visible.

Early version of enemies' movement being driven by boids. There is no nav mesh usage yet, just rigidbodies to prevent clipping through the level's obstacles

So I spent the next week and a half on a prototype to do exactly that. I had a barebones version working, but quickly hit performance limits because of inefficient neighbor lookups that wasted time on out-of-range boids. After implementing a semi-efficient octant grid for spatial partitioning (it uses a struct per octant; a more optimized version would simply store the octant data in a flat array) to cut down the cost of those lookups, I could comfortably run a few thousand boids.
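To illustrate why spatial partitioning helps here, below is a minimal sketch of a uniform grid for neighbor queries. It is not the octant grid from the prototype: it swaps in a dictionary keyed by integer cell coordinates for brevity, and the names `SpatialGrid`, `insert` and `neighbors` are made up for this example.

```python
from collections import defaultdict
from math import floor


class SpatialGrid:
    """Uniform 3D grid that buckets boids by cell (hypothetical helper)."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        # Maps (ix, iy, iz) cell coordinates to a list of (boid_id, position).
        self.cells = defaultdict(list)

    def _cell_of(self, pos):
        # floor() keeps negative coordinates in the correct cell.
        return tuple(floor(c / self.cell_size) for c in pos)

    def insert(self, boid_id, pos):
        self.cells[self._cell_of(pos)].append((boid_id, pos))

    def neighbors(self, pos, radius):
        """Return ids of boids within `radius` of `pos`.

        Only cells that can overlap the query sphere are visited, so
        far-away boids are never distance-tested. A boid stored exactly
        at `pos` is included in the result.
        """
        reach = int(radius // self.cell_size) + 1
        cx, cy, cz = self._cell_of(pos)
        radius_sq = radius * radius
        found = []
        for ix in range(cx - reach, cx + reach + 1):
            for iy in range(cy - reach, cy + reach + 1):
                for iz in range(cz - reach, cz + reach + 1):
                    # .get() avoids creating empty cells in the defaultdict.
                    for boid_id, other in self.cells.get((ix, iy, iz), ()):
                        dist_sq = sum((a - b) ** 2 for a, b in zip(pos, other))
                        if dist_sq <= radius_sq:
                            found.append(boid_id)
        return found
```

In a per-frame loop the grid would be rebuilt (or updated) before each boid queries its neighbors. Picking a cell size close to the boids' perception radius keeps the number of visited cells small.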

A collection of clips captured from various entertaining bugs whilst implementing the usage of the navmesh

I spent a few more days trying to recreate the movement behavior of Unity’s AI agent, so I could restrict the boid-driven movement to the navmesh, before realizing I could use the very component I was trying to recreate, via the function AI.NavMeshAgent.Move() (doc link). This allowed me to apply a relative movement offset to the agent. And just like that, basic boid movement via AI agents. Even when the player is visible, the agents still use the boid movement, with a bias towards the player that comes from a navmesh path. It functions remarkably well and offers a significantly better experience than the rudimentary AI path-finding that was previously in the game.
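The combination described above (classic boid rules plus a pull towards the next point on a path) can be sketched roughly as follows. This is an engine-agnostic illustration, not the project's code: `boid_offset`, its weights and the `path_corner` parameter are all assumptions for this example. In Unity, the returned offset is the kind of value that would be handed to `NavMeshAgent.Move()`.

```python
import math


def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _scale(a, s):
    return tuple(x * s for x in a)


def _length(a):
    return math.sqrt(sum(x * x for x in a))


def _normalize(a):
    n = _length(a)
    return _scale(a, 1.0 / n) if n > 1e-9 else (0.0, 0.0, 0.0)


def boid_offset(pos, vel, neighbors, path_corner, dt,
                speed=4.0, w_sep=1.5, w_ali=1.0, w_coh=1.0, w_target=2.0):
    """Return this frame's relative movement offset for one boid.

    neighbors:   list of (position, velocity) tuples for nearby boids.
    path_corner: next point on a path towards the target, or None when
                 no target is visible (pure flocking).
    All weights are hypothetical tuning values.
    """
    steer = (0.0, 0.0, 0.0)
    if neighbors:
        count = len(neighbors)
        center = (0.0, 0.0, 0.0)
        avg_vel = (0.0, 0.0, 0.0)
        separation = (0.0, 0.0, 0.0)
        for other_pos, other_vel in neighbors:
            center = _add(center, other_pos)
            avg_vel = _add(avg_vel, other_vel)
            away = _sub(pos, other_pos)
            dist = _length(away)
            if dist > 1e-9:
                # Closer neighbors push harder (inverse-square falloff).
                separation = _add(separation, _scale(away, 1.0 / (dist * dist)))
        center = _scale(center, 1.0 / count)
        avg_vel = _scale(avg_vel, 1.0 / count)
        steer = _add(steer, _scale(_normalize(separation), w_sep))
        steer = _add(steer, _scale(_normalize(avg_vel), w_ali))
        steer = _add(steer, _scale(_normalize(_sub(center, pos)), w_coh))
    if path_corner is not None:
        # Bias towards the target along the path instead of replacing flocking.
        steer = _add(steer, _scale(_normalize(_sub(path_corner, pos)), w_target))
    direction = _normalize(_add(_normalize(vel), steer))
    return _scale(direction, speed * dt)
```

Because the target only contributes one weighted term, raising or lowering `w_target` shifts the agents between loose flocking and direct pursuit without changing the rest of the logic.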

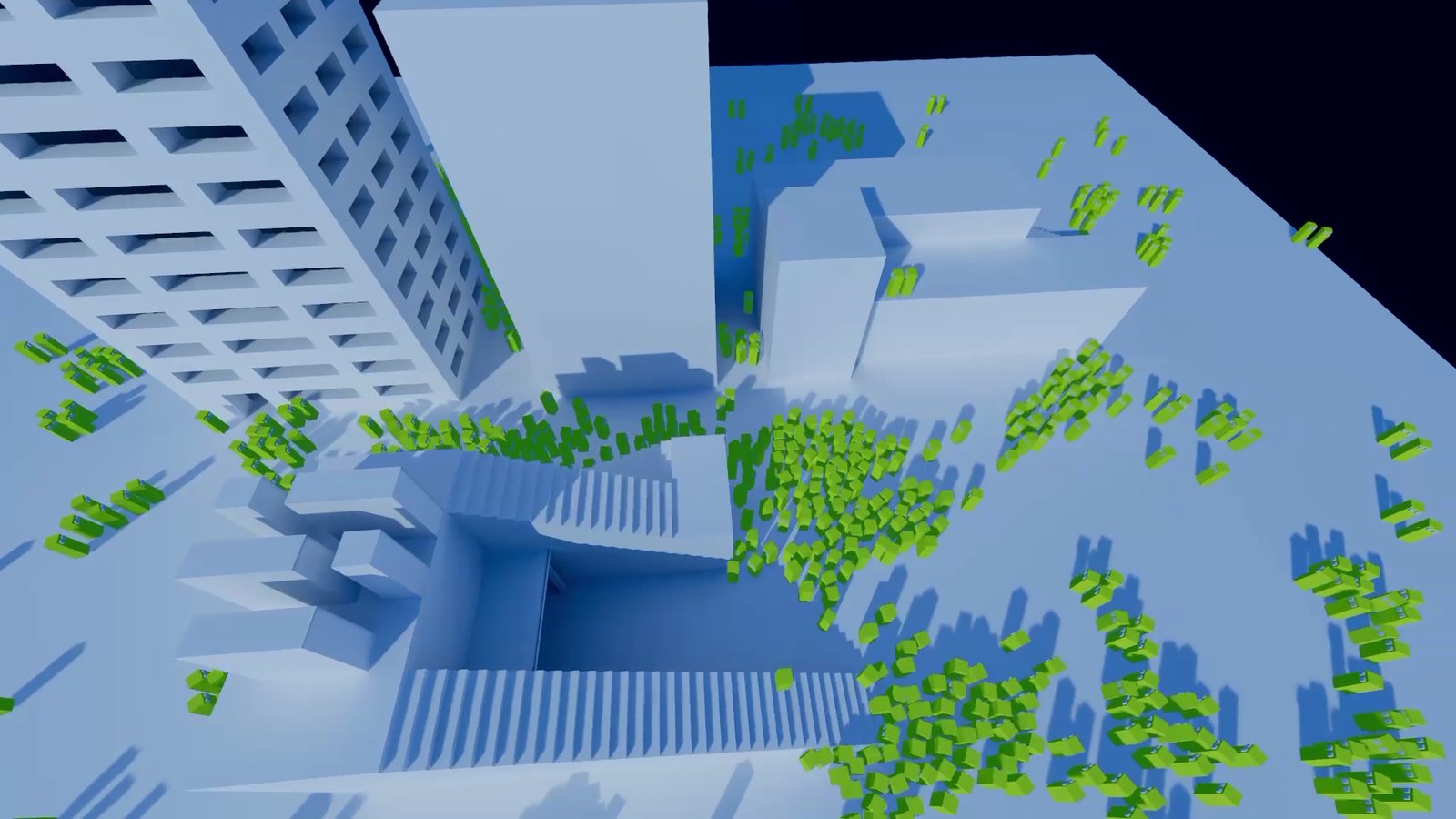

Enemies' movement being driven by boids with a bias towards the "player" (camera gizmo icon) with nav mesh obstacle avoidance

It still has various avenues for optimization, such as only path-finding to the player at a much lower rate depending on the distance from the player + distance the player has travelled since the last successful path. Also, boids in the same swarm could most likely share the same general path, assuming they’re all the same archetype. I didn’t add these since I wanted to first explore more decision-making systems.
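As a rough sketch of the first idea (not something that exists in the project yet), the re-pathing rate could be gated on both distance and how far the player has moved since the last path. Every threshold and name below is a placeholder chosen for illustration.

```python
def repath_interval(distance_to_player,
                    min_interval=0.1, max_interval=2.0, far_distance=50.0):
    """Seconds to wait between path requests.

    Grows linearly from min_interval (agent next to the player) to
    max_interval (agent at far_distance or beyond). Placeholder values.
    """
    t = min(max(distance_to_player / far_distance, 0.0), 1.0)
    return min_interval + (max_interval - min_interval) * t


def should_repath(distance_to_player, player_moved, seconds_since_path,
                  move_fraction=0.1, min_move=1.0):
    """Decide whether an agent should request a new path this frame.

    player_moved is the distance the player has travelled since the
    agent's last successful path. Far-away agents tolerate more player
    movement before their old path counts as stale.
    """
    if seconds_since_path < repath_interval(distance_to_player):
        return False
    move_threshold = max(min_move, distance_to_player * move_fraction)
    return player_moved >= move_threshold
```

With these placeholder numbers, an agent 5 units away re-paths after about 0.3 seconds once the player has moved 1 unit, while an agent 50 units away waits 2 seconds and ignores anything under 5 units of player movement.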

Initial proof-of-concept enabling the enemies to perform parkour

Decision Making Design

I had already used behavior trees previously, and even followed a great YouTube tutorial on creating a node editor for them (which I extended with UI to show property values). I found them quite tedious to work with, even with a node editor, simply because I wanted the agent’s behavior to change dynamically based on conditions/time, which is possible but seems to require more work to get up and running.

I had heard of utility AI before but assumed at the time it was intended for non-gameplay AI behavior, probably because of the word “utility”. However, after looking into it, I found the approach far more comprehensible and easier to reason about, so I started working on a basic implementation after prototyping the architecture on paper.
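For readers who haven't met utility AI before, the core idea fits in a few lines: each behavior scores itself from the current context, and the agent runs whichever behavior scores highest. The sketch below is a generic textbook-style version, not the implementation from this project; the behavior names, context keys and curves are all invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Context = Dict[str, float]


@dataclass
class Behavior:
    name: str
    # Each consideration maps the context to a score in [0, 1].
    considerations: List[Callable[[Context], float]]

    def score(self, ctx: Context) -> float:
        # Multiplying means any consideration scoring 0 vetoes the behavior.
        total = 1.0
        for consideration in self.considerations:
            total *= min(max(consideration(ctx), 0.0), 1.0)
        return total


def select_behavior(behaviors: List[Behavior], ctx: Context) -> Behavior:
    """Pick the behavior with the highest utility for this context."""
    return max(behaviors, key=lambda b: b.score(ctx))


# Hypothetical behaviors for a swarm enemy.
BEHAVIORS = [
    Behavior("chase", [
        lambda c: c["player_visible"],
        lambda c: 1.0 - c["distance"] / c["max_range"],
    ]),
    Behavior("investigate", [
        lambda c: c["noise_level"],
        lambda c: 1.0 - c["player_visible"],
    ]),
    # Constant low score acts as a fallback when nothing else applies.
    Behavior("wander", [lambda c: 0.2]),
]
```

For example, with `player_visible` at 1.0 and the player at a quarter of `max_range`, "chase" scores 0.75 and wins. With no visible player and a `noise_level` of 0.6, "investigate" wins instead. Adding a new behavior is just another entry in the list, which is a large part of why this style is easy to reason about.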

As I was looking around for more details on utility AI, I came across Bobby Anguelov’s awesome YouTube channel, which is full of game dev lectures. His two game AI talks in particular (intro to AI talk, behavior selection talk) convinced me to try out his proposed approach.

Current AI System Progress

Which leads to now: I’ve gotten most of the core systems in place, including lots of visual debugging + UI tools/systems to make the AI easier to create and debug in the future. As you can see below, I have made most of the systems easily changeable in real-time through the editor, enabling plug-and-play testing.

A group of agents pathfinding to a target destination, whilst producing audio stimuli representing footsteps

Example visual stimulus being detected by an agent's visual sensors

Demo of real-time, inspector-driven changes being applied instantly to the AI agent's vision sensor
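The post doesn't go into how the vision sensor works internally, so as a generic illustration only: a common starting point is a view-cone test driven by a range and a field-of-view angle, which are exactly the sort of parameters that are handy to tweak live. The function name and parameters below are assumptions, and a real sensor would also need an occlusion check (for example a raycast), which is left out here.

```python
import math


def in_view_cone(eye, forward, target, view_distance, fov_degrees):
    """Return True if `target` is inside the sensor's view cone.

    eye / target:  (x, y, z) positions.
    forward:       direction the sensor faces (need not be normalized).
    fov_degrees:   full cone angle, so the half-angle is used for the test.
    Occlusion by level geometry is NOT checked in this sketch.
    """
    to_target = tuple(t - e for t, e in zip(target, eye))
    dist = math.sqrt(sum(c * c for c in to_target))
    if dist > view_distance:
        return False
    if dist < 1e-9:
        return True  # Target is at the eye position.
    forward_len = math.sqrt(sum(c * c for c in forward))
    if forward_len < 1e-9:
        return False  # Degenerate facing direction.
    cos_angle = sum(f * t for f, t in zip(forward, to_target)) / (dist * forward_len)
    return cos_angle >= math.cos(math.radians(fov_degrees / 2.0))
```

Comparing cosines avoids calling `acos` per target, which matters once many agents are each checking many stimuli.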

Goals for the Next Few Weeks

I’m going to focus on fleshing out the player’s movement capabilities some more, in combination with designing a focused sandbox level to test with. Once that is in place, I’m going to block out a very rough set of city blocks with some interiors (empty floors with stairs, elevators and windows) so I can develop the enemy AI to scale to larger swarms and give the enemies movement abilities comparable to the player’s. I’ll also start experimenting with aerial pathfinding, probably by generating a graph of bounding boxes.